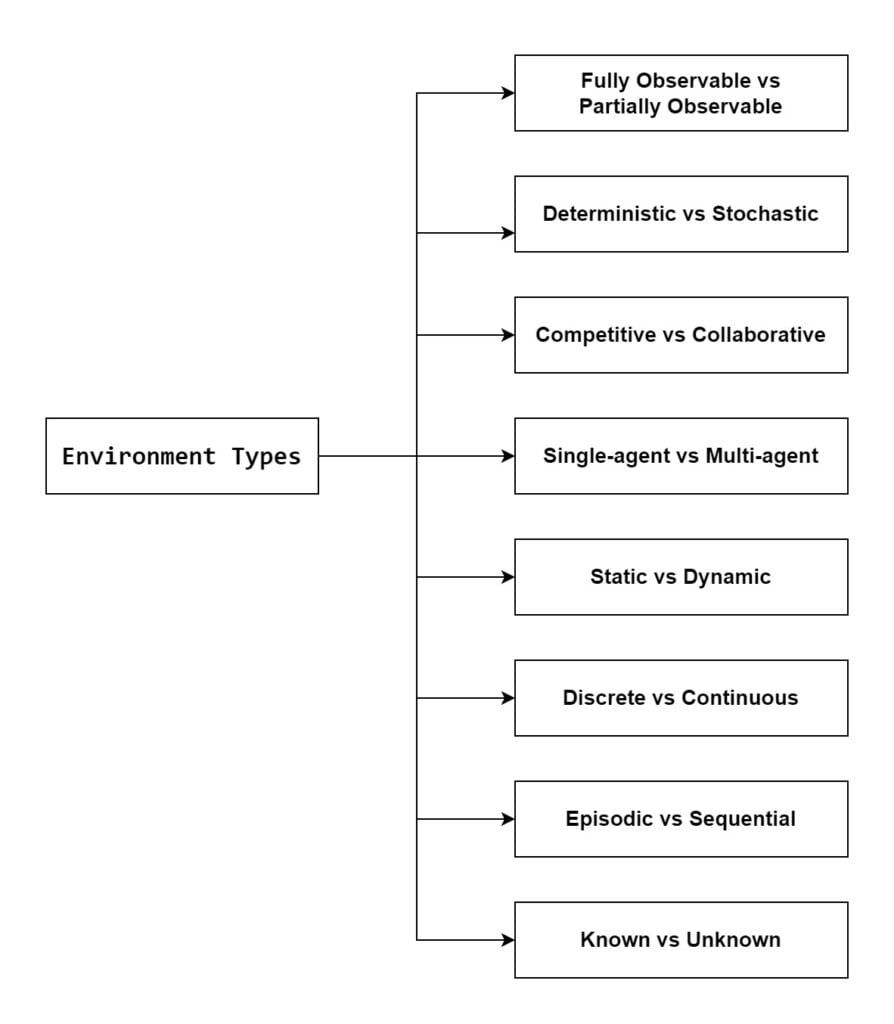

In artificial intelligence, the environment is everything that surrounds the agent. The agent perceives the environment through sensors and acts on it through actuators. Environments can be classified along several dimensions:

- Fully Observable vs Partially Observable

- Deterministic vs Stochastic

- Competitive vs Collaborative

- Single-agent vs Multi-agent

- Static vs Dynamic

- Discrete vs Continuous

- Episodic vs Sequential

- Known vs Unknown

1. Fully Observable vs Partially Observable

- When an agent’s sensors can access the complete state of the environment at every point in time, the environment is said to be fully observable; otherwise, it is partially observable.

- A fully observable environment is convenient to work with, since the agent does not need to maintain an internal history to keep track of the world.

- When the agent has no sensors at all, the environment is said to be unobservable.

Examples:

- Chess – the board and the opponent’s moves are both fully observable.

- Driving – the environment is partially observable because the driver cannot see what is around the corner.
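The distinction can be pictured as the difference between a percept that contains the whole state and one that contains only part of it. The following is a minimal Python sketch under stated assumptions: the `GridState` class, the toy grid world, and the `sensor_range` parameter are hypothetical names introduced for illustration and do not come from any library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GridState:
    """Complete state of a toy grid world (hypothetical example)."""
    agent_pos: tuple
    obstacle_positions: frozenset


def observe_fully(state: GridState) -> GridState:
    # Fully observable: the percept is the complete state.
    return state


def observe_partially(state: GridState, sensor_range: int = 1) -> frozenset:
    # Partially observable: the agent only perceives obstacles that lie
    # within sensor_range cells of its own position.
    ax, ay = state.agent_pos
    return frozenset(
        (x, y)
        for (x, y) in state.obstacle_positions
        if max(abs(x - ax), abs(y - ay)) <= sensor_range
    )


state = GridState(agent_pos=(0, 0), obstacle_positions=frozenset({(1, 1), (4, 4)}))
full_percept = observe_fully(state)
partial_percept = observe_partially(state)
```

Here the obstacle at `(4, 4)` exists in the state but is missing from `partial_percept`, which is why an agent in a partially observable environment usually has to remember what it has seen.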

2. Deterministic vs Stochastic

- A deterministic environment is one in which the current state of the environment and the agent’s chosen action completely determine the next state.

- In a stochastic environment, the next state is not completely determined by the current state and action, so the agent cannot predict the outcome with certainty.

Examples:

- Chess – In any given position, a piece has only a limited set of legal moves, and the result of each move is fully determined.

- Self-Driving Cars – The outcome of a self-driving car’s actions cannot be predicted with certainty, because other traffic, pedestrians, and road conditions vary over time.
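One way to see the difference is to compare two transition functions. The sketch below is illustrative only: the one-dimensional world, the function names, and the `slip_prob` parameter are assumptions made for this example.

```python
import random


def step_deterministic(position: int, action: int) -> int:
    # The same position and action always produce the same next position.
    return position + action


def step_stochastic(position: int, action: int, rng: random.Random,
                    slip_prob: float = 0.2) -> int:
    # With probability slip_prob the action has no effect (the agent "slips"),
    # so the next position is not fixed by (position, action) alone.
    if rng.random() < slip_prob:
        return position
    return position + action


rng = random.Random(0)  # seeded so the run is reproducible
deterministic_outcomes = {step_deterministic(0, 1) for _ in range(100)}
stochastic_outcomes = {step_stochastic(0, 1, rng) for _ in range(100)}
```

Repeating the same action from the same position yields a single outcome in the deterministic case, while the stochastic version can end in more than one next position.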

3. Competitive vs Collaborative

- When an agent competes against another agent to achieve the best outcome for itself, it is said to be in a competitive environment.

- When multiple agents work together to achieve a shared result, they are said to be in a collaborative environment.

Examples:

- Chess – the two players compete with each other to win the game, which is the desired outcome.

- Self-Driving Cars – When several self-driving cars share the road, they cooperate to avoid collisions and reach their destinations, which is the desired outcome.
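A common way to formalize this difference is through how rewards are assigned to the agents. This framing is an assumption added for illustration, not something the text above specifies: in the sketch, a competitive game is modeled as zero-sum, and a collaborative task gives every agent the same shared reward. All function names and reward values are hypothetical.

```python
def competitive_rewards(winner: str, players=("white", "black")) -> dict:
    # Zero-sum: whatever the winner gains, the loser loses.
    if winner not in players:
        raise ValueError(f"unknown player: {winner}")
    return {player: (1 if player == winner else -1) for player in players}


def collaborative_rewards(collision: bool, cars: list) -> dict:
    # Shared objective: every car receives the same reward, so no car
    # can do better by making another car do worse.
    reward = -10 if collision else 1
    return {car: reward for car in cars}


chess_result = competitive_rewards("white")
traffic_result = collaborative_rewards(collision=False, cars=["car_a", "car_b", "car_c"])
```

In the competitive case the rewards sum to zero, while in the collaborative case all agents share a single value.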

4. Single-agent vs Multi-agent

- A single-agent environment is defined as one that has only one agent.

- A multi-agent environment is one in which more than one agent exists.

Examples:

- A person left alone in a maze is an example of a single-agent system.

- Football is a multi-agent game since each team has 11 players.

5. Dynamic vs Static

- A dynamic environment is one that can change while the agent is deliberating or carrying out an action.

- A static environment is one that does not change its state unless the agent acts on it.

Examples:

- A roller coaster ride is dynamic since it is in motion and the environment changes all the time.

- An empty house is static because nothing in it changes while the agent is deciding what to do.
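The practical consequence is that in a dynamic environment a plan can become stale while the agent is still thinking. The following toy sketch illustrates this; the moving target, the tick-based clock, and the `planning_error` function are hypothetical and chosen only for illustration.

```python
def planning_error(environment_is_dynamic: bool, deliberation_ticks: int) -> int:
    """Return how far a target has moved away from the position the agent planned for."""
    target_position = 0
    planned_for = target_position  # snapshot taken when deliberation starts
    for _ in range(deliberation_ticks):
        if environment_is_dynamic:
            # The world keeps changing while the agent deliberates.
            target_position += 1
    return target_position - planned_for


static_error = planning_error(environment_is_dynamic=False, deliberation_ticks=5)
dynamic_error = planning_error(environment_is_dynamic=True, deliberation_ticks=5)
```

In the static case the snapshot stays valid however long the agent deliberates; in the dynamic case the error grows with deliberation time.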

6. Discrete vs Continuous

- An environment is discrete when it has a finite number of distinct percepts and actions.

- An environment is continuous when its percepts or actions take values from a continuous range, so they cannot be counted.

Examples:

- Chess is a discrete game since every position offers a finite number of moves. The number of moves varies from game to game, but it is always finite.

- Self-driving cars are an example of continuous environments since quantities such as speed, steering angle, and position vary smoothly and cannot be counted.
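In code, this difference often shows up in how the action space is represented. The sketch below assumes a hypothetical four-move grid game for the discrete case and a hypothetical `DrivingAction` with made-up value ranges for the continuous case.

```python
from dataclasses import dataclass
from enum import Enum


class GridMove(Enum):
    # Discrete: the full set of actions can be enumerated.
    UP = "up"
    DOWN = "down"
    LEFT = "left"
    RIGHT = "right"


@dataclass(frozen=True)
class DrivingAction:
    # Continuous: each field can take any real value within its range,
    # so the set of possible actions cannot be listed one by one.
    steering_angle: float  # radians, assumed range [-0.5, 0.5]
    throttle: float        # assumed range [0.0, 1.0]

    def __post_init__(self) -> None:
        if not -0.5 <= self.steering_angle <= 0.5:
            raise ValueError("steering_angle out of range")
        if not 0.0 <= self.throttle <= 1.0:
            raise ValueError("throttle out of range")


all_grid_moves = list(GridMove)
one_driving_action = DrivingAction(steering_angle=0.1234, throttle=0.5)
```

A planner can loop over `all_grid_moves`, but for `DrivingAction` it has to sample or optimize over ranges instead.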

7. Episodic vs Sequential

- In an episodic task environment, the agent’s experience is divided into atomic episodes. In each episode, the agent receives a percept and then performs a single action, and the next episode does not depend on the actions taken in previous ones.

- Example: Consider a pick-and-place robot used to detect defective parts on a conveyor belt. The robot (agent) makes a decision about the current part each time, so there is no dependency between past and present decisions.

- In a sequential environment, the current decision can affect all future decisions. The agent therefore has to take into account both what it has already done and how its current action will constrain what it can do later.

- Example: Checkers – a game in which each move affects all the moves that follow.
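The two cases can be contrasted with a pair of small functions. This is a minimal sketch under stated assumptions: `inspect_part`, its `threshold`, and the running-total game in `play_counter_game` are hypothetical stand-ins for a part inspector and a board game.

```python
def inspect_part(defect_score: float, threshold: float = 0.5) -> str:
    # Episodic: the decision depends only on the current percept,
    # never on earlier parts or earlier decisions.
    return "reject" if defect_score > threshold else "accept"


def play_counter_game(moves: list, target: int = 10) -> list:
    # Sequential: every move changes the running total, which determines
    # the situation all later moves have to deal with.
    total = 0
    history = []
    for move in moves:
        total += move
        history.append(total)
        if total >= target:
            break  # the game ends; remaining moves are never played
    return history


decisions = [inspect_part(score) for score in [0.9, 0.1, 0.9]]
totals = play_counter_game([3, 3, 3, 3])
```

Reordering the parts changes nothing about each individual inspection, whereas changing an early move in the counter game changes every total after it.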

8. Known vs Unknown

- Strictly speaking, known vs unknown is not a property of the environment itself; it describes the agent’s state of knowledge about how the environment works.

- In a known environment, the agent knows the outcomes of all its actions (or the outcome probabilities, if the environment is stochastic). In an unknown environment, the agent must first learn how the environment works before it can make good decisions.

- It is quite feasible for known environments to be partially observable and for unknown environments to be fully observable.
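To make "learning how the environment works" concrete, the sketch below shows an agent estimating transition probabilities by counting what it observes. It is one simple possibility, not the only way to handle unknown environments; the `hidden_step` dynamics, the 80% success rate, and the `LearnedModel` class are all assumptions invented for this example.

```python
import random
from collections import Counter, defaultdict


def hidden_step(state: int, action: int, rng: random.Random) -> int:
    # True dynamics, which the agent cannot inspect (hypothetical):
    # the action succeeds 80% of the time, otherwise the state is unchanged.
    return state + action if rng.random() < 0.8 else state


class LearnedModel:
    """Estimates transition probabilities from observed experience."""

    def __init__(self) -> None:
        self.counts = defaultdict(Counter)

    def record(self, state: int, action: int, next_state: int) -> None:
        self.counts[(state, action)][next_state] += 1

    def probability(self, state: int, action: int, next_state: int) -> float:
        observed = self.counts.get((state, action))
        if not observed:
            return 0.0  # nothing is known about this state-action pair yet
        return observed[next_state] / sum(observed.values())


rng = random.Random(42)  # seeded so the run is reproducible
model = LearnedModel()
for _ in range(1000):
    model.record(0, 1, hidden_step(0, 1, rng))

estimated_success = model.probability(0, 1, 1)
```

With enough experience, `estimated_success` approaches the hidden success rate, at which point the agent can treat the environment much as it would a known one.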

Task Environments and their Characteristics

The table below lists the properties of several familiar environments. Note that the classifications are not always clear-cut. For example, we have listed the medical diagnosis task as single-agent because the disease process in a patient is not profitably modeled as an agent. Still, a medical-diagnosis system might also have to deal with recalcitrant patients and skeptical staff, so the environment could have a multi-agent aspect. Furthermore, medical diagnosis is episodic if the task is seen as choosing a diagnosis from a list of symptoms; the problem is sequential if the effort includes suggesting a series of tests, assessing progress over the course of therapy, dealing with several patients, and so on.

| Task Environment | Observable | Agents | Deterministic | Episodic | Static | Discrete |
|---|---|---|---|---|---|---|
| Crossword Puzzle | Fully | Single | Deterministic | Sequential | Static | Discrete |
| Chess with a clock | Fully | Multi | Deterministic | Sequential | Semi | Discrete |
| Poker | Partially | Multi | Stochastic | Sequential | Static | Discrete |
| Backgammon | Fully | Multi | Stochastic | Sequential | Static | Discrete |
| Taxi Driving | Partially | Multi | Stochastic | Sequential | Dynamic | Continuous |
| Medical Diagnosis | Partially | Single | Stochastic | Sequential | Dynamic | Continuous |
| Image Analysis | Fully | Single | Deterministic | Episodic | Semi | Continuous |
| Part-picking Robot | Partially | Single | Stochastic | Episodic | Dynamic | Continuous |
| Refinery Controller | Partially | Single | Stochastic | Sequential | Dynamic | Continuous |
| English Tutor | Partially | Multi | Stochastic | Sequential | Dynamic | Discrete |

We have not included a “known/unknown” column because, as explained earlier, this is not strictly a property of the environment. For some environments, such as chess and poker, it is quite easy to supply the agent with full knowledge of the rules, but it is nonetheless interesting to consider how an agent might learn to play these games without such knowledge.
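The table of task environments can also be held as plain data and queried by property. The sketch below is one possible encoding; the `TaskEnvironment` tuple and the `find_environments` helper are hypothetical names, and the field values are copied from the table.

```python
from typing import NamedTuple


class TaskEnvironment(NamedTuple):
    name: str
    observable: str
    agents: str
    deterministic: str
    episodic: str
    static: str
    discrete: str


TASK_ENVIRONMENTS = [
    TaskEnvironment("Crossword Puzzle", "Fully", "Single", "Deterministic", "Sequential", "Static", "Discrete"),
    TaskEnvironment("Chess with a clock", "Fully", "Multi", "Deterministic", "Sequential", "Semi", "Discrete"),
    TaskEnvironment("Poker", "Partially", "Multi", "Stochastic", "Sequential", "Static", "Discrete"),
    TaskEnvironment("Backgammon", "Fully", "Multi", "Stochastic", "Sequential", "Static", "Discrete"),
    TaskEnvironment("Taxi Driving", "Partially", "Multi", "Stochastic", "Sequential", "Dynamic", "Continuous"),
    TaskEnvironment("Medical Diagnosis", "Partially", "Single", "Stochastic", "Sequential", "Dynamic", "Continuous"),
    TaskEnvironment("Image Analysis", "Fully", "Single", "Deterministic", "Episodic", "Semi", "Continuous"),
    TaskEnvironment("Part-picking Robot", "Partially", "Single", "Stochastic", "Episodic", "Dynamic", "Continuous"),
    TaskEnvironment("Refinery Controller", "Partially", "Single", "Stochastic", "Sequential", "Dynamic", "Continuous"),
    TaskEnvironment("English Tutor", "Partially", "Multi", "Stochastic", "Sequential", "Dynamic", "Discrete"),
]


def find_environments(**properties: str) -> list:
    # Return the names of all environments whose fields match every
    # keyword argument, e.g. find_environments(agents="Multi").
    return [
        env.name
        for env in TASK_ENVIRONMENTS
        if all(getattr(env, field) == value for field, value in properties.items())
    ]


fully_observable_multi_agent = find_environments(observable="Fully", agents="Multi")
episodic_tasks = find_environments(episodic="Episodic")
```

Queries like these make it easy to see which environments share a combination of properties, for example which ones are both fully observable and multi-agent.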