Artificial intelligence can be described as the study of rational agents. A rational agent can be any decision-making entity, such as a person, company, machine, or software program. It takes the best available action based on past and present percepts (the agent’s perceptual inputs at a given moment). An AI system consists of an agent and its environment. The agent interacts with its surroundings, and the environment may contain other agents.

Before we proceed, we should understand sensors, effectors, and actuators.

- Sensor: A sensor is a device that detects changes in the environment and transmits the data to other electrical devices. An agent uses sensors to monitor its surroundings.

- Actuators: Actuators are machine components that turn energy into motion. Actuators are exclusively in charge of moving and controlling a system. An actuator can be anything from an electric motor to gears and rails.

- Effectors: Effectors are devices that have an impact on the environment. Legs, wheels, arms, fingers, wings, fins, and a display screen are all examples of effectors.

What is an Agent?

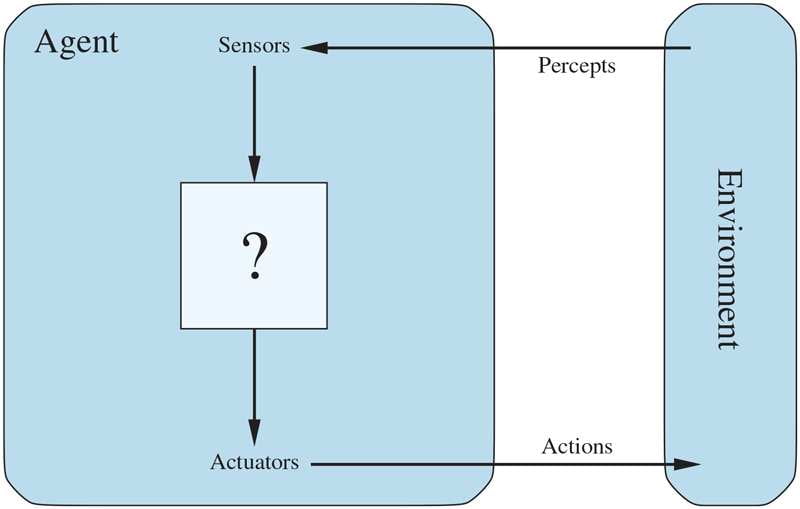

An agent is anything that perceives its environment through sensors and acts on that environment through actuators. An agent continuously cycles through perceiving, thinking, and acting.

An agent is defined by two activities:

- detecting its environment via sensors and

- influencing the environment via actuators

Examples of Agent

- Human Agent: A human agent has sensors such as eyes, ears, and other organs, as well as actuators such as hands, legs, mouth, and other body parts.

- Robotic Agent: A robotic agent has cameras and infrared range finders that operate as sensors, as well as numerous motors that act as actuators.

- Software Agent: A software agent receives keystrokes, file contents, and incoming network packets as sensory input, and acts on its environment by displaying output on the screen, writing files, and sending network packets.

As a result, the environment around us is replete with agents such as thermostats, cell phones, cameras, and even ourselves.

Structure of an AI Agent

The objective of AI is to create an agent program that implements the agent function. To understand the structure of intelligent agents, we must first understand the architecture and the agent program. Their relationship can be expressed as follows:

Agent = Architecture + Agent Program

The architecture is the mechanism on which the agent operates. It is a device having sensors and actuators, such as a robotic car, a camera, or a computer.

An agent function is a mapping from a percept sequence (the history of everything the agent has perceived up to that point) to an action.

f: P* → A

An agent program is a program that implements an agent function.
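To make the difference between an agent function and an agent program more concrete, here is a minimal sketch of a table-driven agent program in Python. The percept format `(location, status)`, the action names, and the lookup table are illustrative assumptions (a two-square vacuum world), not part of the definitions above.

```python
from typing import Callable, Dict, List, Tuple

Percept = Tuple[str, str]   # hypothetical percept: (location, status)
Action = str

def make_table_driven_agent(table: Dict[Tuple[Percept, ...], Action],
                            default: Action = "NoOp") -> Callable[[Percept], Action]:
    """Return an agent program that implements f: P* -> A by table lookup."""
    percepts: List[Percept] = []   # the percept sequence observed so far

    def program(percept: Percept) -> Action:
        percepts.append(percept)
        # Look up the whole percept history, not just the latest percept.
        return table.get(tuple(percepts), default)

    return program

# Illustrative table for an assumed two-square vacuum world.
TABLE = {
    (("A", "Dirty"),): "Suck",
    (("A", "Clean"),): "Right",
    (("A", "Dirty"), ("A", "Clean")): "Right",
    (("A", "Clean"), ("B", "Dirty")): "Suck",
}

agent = make_table_driven_agent(TABLE)
first_action = agent(("A", "Dirty"))    # history: one percept
second_action = agent(("A", "Clean"))   # history: two percepts
print(first_action, second_action)
```

The table needs one entry per possible percept sequence, so it grows very quickly. That is why practical agent programs compute the action instead of storing it.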

Intelligent Agents

An intelligent agent is an autonomous entity that uses sensors and actuators to act on its environment in pursuit of its goals. Intelligent agents may learn from their environment to achieve those goals. A thermostat is a simple example of an intelligent agent.

The following are the four key rules for an AI agent:

- Rule 1: An AI agent must be able to comprehend its environment.

- Rule 2: Decisions must be made based on the observation.

- Rule 3: A decision should be followed by an action.

- Rule 4: An AI agent’s actions must be rational.

Rational Agent

A rational agent is one that has clear preferences, models uncertainty, and acts so as to maximize its performance measure over all feasible actions.

A rational agent is said to do the right thing. AI is concerned with building rational agents, drawing on game theory and decision theory, for use in many real-world scenarios.

Rational action is particularly important in reinforcement learning, where an agent receives a positive reward for each good action and a negative reward for each wrong action.

Rationality

Rationality is the state of being rational, sensible, and possessing sound judgment.

Rationality is concerned with expected actions and outcomes, given what the agent has perceived. Taking actions in order to obtain useful information is an important part of rationality.

An agent’s rationality is judged by its performance. What is rational at any given time depends on four things:

- The performance measure that defines the criterion of success.

- The agent’s prior knowledge of the environment.

- The actions that the agent can perform.

- The agent’s percept sequence to date.
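The four factors above can be tied together in a small sketch: given the available actions, beliefs about outcomes (standing in for prior knowledge plus percepts), and a performance measure, a rational agent picks the action with the highest expected performance. The umbrella scenario, the probabilities, and the scores are made-up assumptions for illustration only.

```python
from typing import Callable, Dict, Iterable

def rational_action(actions: Iterable[str],
                    outcome_probs: Callable[[str], Dict[str, float]],
                    performance: Callable[[str], float]) -> str:
    """Pick the action with the highest expected performance."""
    def expected(action: str) -> float:
        return sum(p * performance(outcome)
                   for outcome, p in outcome_probs(action).items())
    return max(actions, key=expected)

# Hypothetical beliefs, as they might follow from prior knowledge and percepts.
BELIEFS = {
    "take_umbrella": {"dry_but_carrying": 1.0},
    "no_umbrella": {"dry": 0.6, "wet": 0.4},
}
# Assumed performance measure over outcomes.
SCORES = {"dry": 1.0, "dry_but_carrying": 0.8, "wet": -2.0}

best = rational_action(BELIEFS, BELIEFS.__getitem__, SCORES.__getitem__)
print(best)
```

Under these assumed numbers, skipping the umbrella has an expected score of 0.6 × 1.0 + 0.4 × (−2.0) = −0.2, so taking the umbrella (0.8) is the rational choice. With different beliefs or a different performance measure, the rational action could change.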

Types of Agents

Agents are classified into five types based on their degree of perceived intelligence and capability:

- Simple Reflex Agents

- Model-Based Reflex Agents

- Goal-Based Agents

- Utility-Based Agents

- Learning Agents

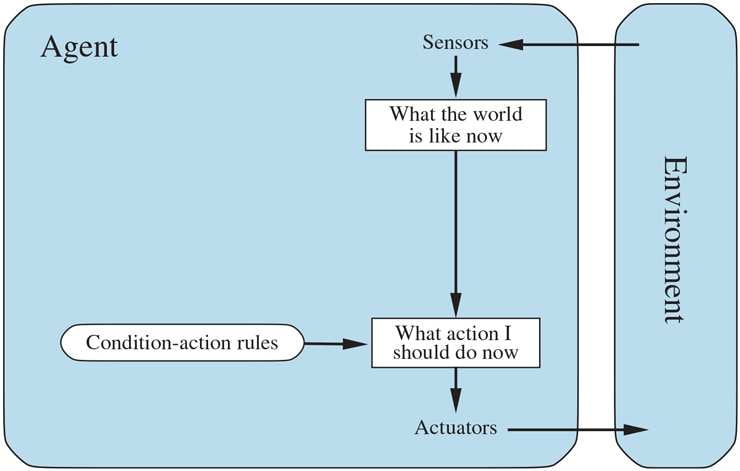

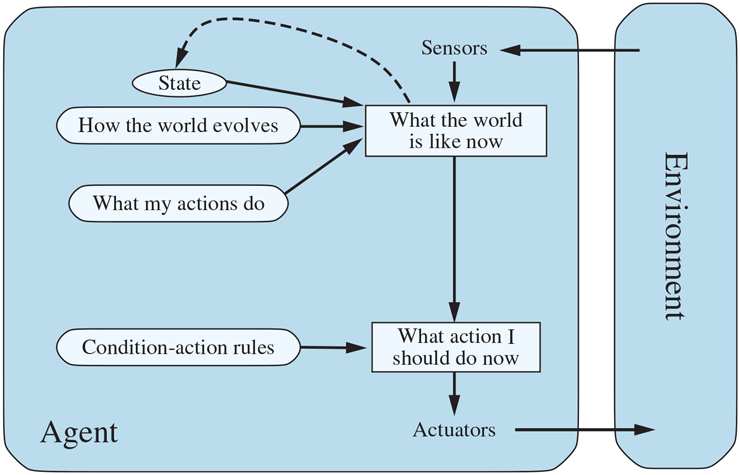

Simple Reflex Agents

- Simple reflex agents are the most basic agents. They make decisions based only on the current percept and ignore the rest of the percept history.

- These agents can only succeed in a fully observable environment.

- A simple reflex agent uses condition-action rules, which means it maps the current state directly to an action. A room cleaner agent, for example, acts only if there is dirt in the room.

- Problems with the simple reflex agent design approach:

  - They have very limited intelligence.

  - Their rule sets are usually too large to generate and store.

  - They do not adapt to changes in the environment.
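As a rough illustration of condition-action rules, the sketch below implements a simple reflex agent for an assumed two-square room with locations `A` and `B`. The percept format and the action names are hypothetical. Note that the decision uses only the current percept.

```python
from typing import Tuple

Percept = Tuple[str, str]   # (location, status), an assumed percept format

def simple_reflex_vacuum_agent(percept: Percept) -> str:
    """Map the current percept directly to an action via condition-action rules."""
    location, status = percept
    if status == "Dirty":      # rule: dirty square -> clean it
        return "Suck"
    if location == "A":        # rule: clean and in A -> move to B
        return "Right"
    return "Left"              # rule: clean and in B -> move to A

for percept in [("A", "Dirty"), ("A", "Clean"), ("B", "Clean")]:
    print(percept, "->", simple_reflex_vacuum_agent(percept))
```

Because the agent keeps no memory, it moves back and forth forever once both squares are clean. This is one symptom of the limitations listed above.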

Model-Based Reflex Agent

- A model-based agent can work in a partially observable environment and keep track of the situation.

- A model-based agent has two important components:

  - Model: Knowledge about “how things happen in the world,” which is why the agent is called model-based.

  - Internal State: A representation of the current state, built from the percept history.

- These agents hold the model, “which is knowledge of the world,” and choose actions based on it.

- Updating the agent state requires information about:

  - How the world evolves

  - How the agent’s actions affect the world

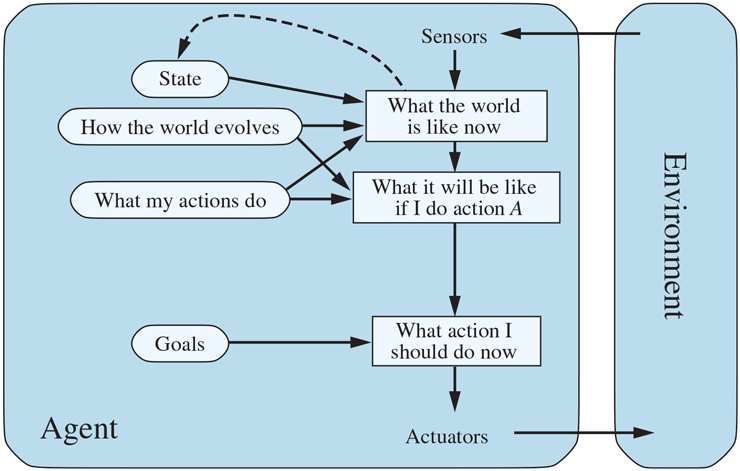
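A minimal sketch of these ideas, reusing an assumed two-square vacuum world: the agent keeps an internal state (the last known status of each square) and updates it from percepts and from a simple model of its own actions. The class name, percept format, and the assumption that clean squares stay clean are all illustrative.

```python
from typing import Dict, Optional, Tuple

class ModelBasedVacuumAgent:
    """Reflex agent with internal state for an assumed two-square world."""

    def __init__(self) -> None:
        # Internal state: last known status of each square (None = unknown).
        self.state: Dict[str, Optional[str]] = {"A": None, "B": None}

    def __call__(self, percept: Tuple[str, str]) -> str:
        location, status = percept
        self.state[location] = status          # update state from the percept
        if status == "Dirty":
            # Model of the action's effect: sucking leaves the square clean.
            self.state[location] = "Clean"
            return "Suck"
        # Model of how the world evolves (assumed): clean squares stay clean.
        if all(s == "Clean" for s in self.state.values()):
            return "NoOp"
        return "Right" if location == "A" else "Left"

agent = ModelBasedVacuumAgent()
actions = [agent(p) for p in [("A", "Dirty"), ("A", "Clean"), ("B", "Clean")]]
print(actions)
```

Unlike a purely reflexive agent, this one can stop once its internal state says every square is clean, even though it only ever perceives one square at a time.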

Goal-Based Agents

- Knowledge of the current state of the environment is not always enough for an agent to decide what to do.

- The agent must know its goal, which describes desirable situations.

- Goal-based agents extend the capabilities of model-based agents by adding “goal” information.

- They select an action in order to attain their goal.

- These agents may have to consider a long sequence of possible actions before deciding whether the goal can be achieved. Such consideration of different scenarios is known as searching and planning, and it makes an agent proactive.

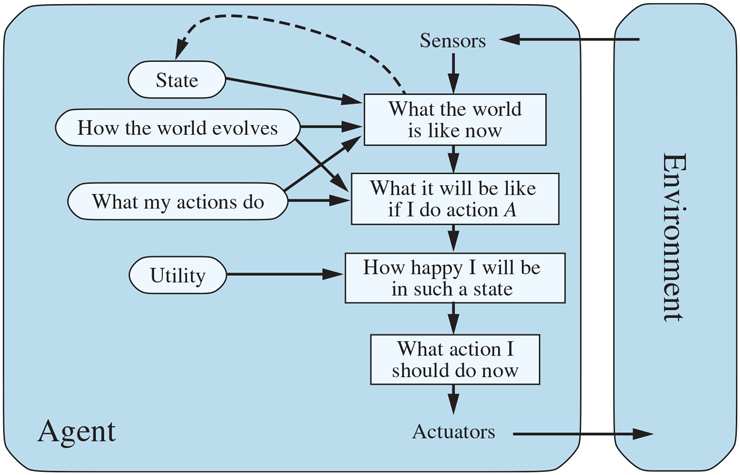
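Searching and planning can be sketched with a breadth-first search over a small map. The graph, the location names, and the choice of breadth-first search are assumptions for illustration; a goal-based agent could use any search or planning method.

```python
from collections import deque
from typing import Dict, List, Optional

# Hypothetical map: each location lists the locations reachable in one move.
GRAPH: Dict[str, List[str]] = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["D"],
    "D": ["E"],
    "E": [],
}

def plan(start: str, goal: str, graph: Dict[str, List[str]]) -> Optional[List[str]]:
    """Breadth-first search: return a shortest path from start to goal, or None."""
    frontier = deque([[start]])   # paths waiting to be extended
    visited = {start}
    while frontier:
        path = frontier.popleft()
        if path[-1] == goal:      # goal test on the last location of the path
            return path
        for nxt in graph[path[-1]]:
            if nxt not in visited:
                visited.add(nxt)
                frontier.append(path + [nxt])
    return None                   # the goal cannot be reached

route = plan("A", "E", GRAPH)
print(route)
```

The agent would then execute the moves along `route` one by one. If `plan` returns `None`, the goal is unreachable from the current state.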

Utility-Based Agents

- These agents are similar to goal-based agents, but they add a utility measure that rates how desirable a given state is.

- A utility-based agent considers not only whether a goal is reached but also the best way to reach it.

- A utility-based agent is useful when there are several feasible actions and the agent must select the best one.

- The utility function maps each state to a real number, which indicates how well that state satisfies the agent’s goals.

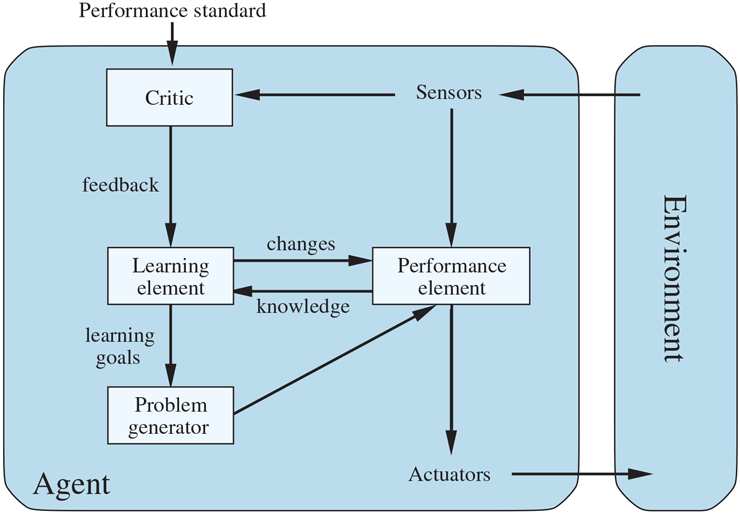
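The following sketch shows one way a utility function can rank alternatives that all reach the same goal. The routes, the state format `(minutes, toll)`, and the weighting inside `utility` are hypothetical choices for illustration.

```python
from typing import Callable, Dict, Iterable, Tuple

Outcome = Tuple[float, float]   # (travel minutes, toll cost), an assumed state format

def choose_by_utility(actions: Iterable[str],
                      result: Callable[[str], Outcome],
                      utility: Callable[[Outcome], float]) -> str:
    """Return the action whose resulting state has the highest utility."""
    return max(actions, key=lambda action: utility(result(action)))

# Hypothetical routes that all reach the same destination.
ROUTES: Dict[str, Outcome] = {
    "highway": (30.0, 5.0),
    "back_roads": (45.0, 0.0),
    "city_center": (40.0, 2.0),
}

def utility(outcome: Outcome) -> float:
    """Assumed utility: fewer minutes and lower tolls are better."""
    minutes, toll = outcome
    return -minutes - 2.0 * toll

best_route = choose_by_utility(ROUTES, ROUTES.__getitem__, utility)
print(best_route)
```

A goal-based agent would treat all three routes as equally acceptable because each reaches the destination. The utility function is what lets the agent prefer one over the others, and changing its weights changes the preferred route.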

Learning Agents

- In AI, a learning agent is an agent that can learn from its past experiences.

- It starts with basic knowledge and then adapts automatically through learning.

- A learning agent has four conceptual components:

  - Learning Element: It is responsible for making improvements by learning from the environment.

  - Critic: The learning element receives feedback from the critic, which describes how well the agent is performing relative to a fixed performance standard.

  - Performance Element: It is responsible for selecting external actions.

  - Problem Generator: It is responsible for suggesting actions that will lead to new and informative experiences.

- As a result, learning agents can learn, assess performance, and seek new methods to enhance performance.
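One possible way to map the four components onto code is sketched below. It is a deliberately simplified, deterministic example: the critic is modeled as a function that returns a numeric score for an action, and the exploration schedule (`explore_every`) and the reward values are assumptions, not a standard algorithm from the text.

```python
from typing import Callable, Dict, List

class LearningAgent:
    """Toy learning agent; the component names follow the list above."""

    def __init__(self, actions: List[str], explore_every: int = 3) -> None:
        self.values: Dict[str, float] = {a: 0.0 for a in actions}  # learned estimates
        self.counts: Dict[str, int] = {a: 0 for a in actions}      # times each was tried
        self.explore_every = explore_every
        self.steps = 0

    def performance_element(self) -> str:
        """Select the action currently believed to be best."""
        return max(self.values, key=self.values.get)

    def problem_generator(self) -> str:
        """Suggest the least-tried action to gain new experience."""
        return min(self.counts, key=self.counts.get)

    def learning_element(self, action: str, feedback: float) -> None:
        """Update the estimate for an action as a running average of feedback."""
        self.counts[action] += 1
        self.values[action] += (feedback - self.values[action]) / self.counts[action]

    def step(self, critic: Callable[[str], float]) -> str:
        """Act once, then learn from the critic's feedback."""
        self.steps += 1
        explore = self.steps % self.explore_every == 0
        action = self.problem_generator() if explore else self.performance_element()
        self.learning_element(action, critic(action))
        return action

# Hypothetical performance standard used by the critic.
REWARDS = {"slow": 0.2, "fast": 1.0, "medium": 0.5}

agent = LearningAgent(list(REWARDS))
history = [agent.step(REWARDS.__getitem__) for _ in range(10)]
print(history)
print(agent.performance_element())
```

The agent starts out preferring whichever action it tried first, and the problem generator is what forces it to discover better alternatives. Without that exploration, the performance element would never find out that another action scores higher.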